
Tuning Node.js and V8 settings to unlock 2x performance and efficiency

Node.js has emerged as a go-to server-side runtime environment for building high-performance web applications and ranks among the three most popular languages in Kubernetes environments.

At the heart of Node.js lies V8, Google’s high-performance JavaScript engine. V8 provides hundreds of configuration options and comes with default settings designed to work in a wide range of scenarios. However, these defaults may not be optimal for your specific application and can even lead to performance bottlenecks or unnecessarily high resource usage.

Unlike with other popular runtimes such as the JVM, .NET, and Go, tuning the V8 configuration is not an established and well-documented way to optimize Node.js applications. As a result, most Node.js applications today run with default settings, leaving significant optimization potential on the table.

In this post, we describe how V8 memory management works and some of the key parameters that can be tuned. Then, we show the results of the optimization studies we conducted and how optimizing V8 configurations achieved a 45% improvement in Node.js application performance and a 68% reduction in CPU usage (and hence cloud costs), all without touching the application code.

The Node.js performance stack

When thinking about performance and optimization opportunities, it’s important to understand that Node.js applications run on top of a technology stack consisting of several layers, each contributing to the overall application performance and resource usage.

Here’s the performance stack of a Node.js application running on Kubernetes:

In this post, we’re focusing on the V8 engine. Before diving into it, let’s briefly describe the other components in the stack:

- Application code: the JavaScript code that powers your application.

- Node.js core: provides the APIs that you leverage to build your applications and the runtime system based on Libuv and the V8 engine.

- Libuv: a multi-platform support library that handles asynchronous I/O operations, including a pool of worker threads that execute tasks too time-consuming to be handled directly within the Node.js event loop.

- Kubernetes pod: defines the CPU and memory resources (requests and limits) that the application can use. Setting them correctly is crucial to ensure high-performance, cost-efficient, and reliable Node.js applications. This is a tricky process on its own; more on this in a future blog post.

The V8 engine

V8 is Google’s open-source, high-performance engine for JavaScript and WebAssembly. The V8 engine’s primary role is compiling and executing JavaScript code via Just-In-Time (JIT) compilation, as well as managing memory.

The first interesting aspect is that the V8 runtime is used both for server-side applications via Node.js and in web browsers such as Chrome. This is important, as these are two very different scenarios from a performance and efficiency perspective.

How does V8 navigate the trade-off between application latency, throughput, and resource footprint? This is a complex design space for language runtimes, with many trade-offs (the same is true for Java and the JVM, see here). More on this later.

The second, less well-known aspect of V8 is that it’s a highly configurable engine. For example, the recently released Node.js 22 has almost 800 configuration options for V8 alone!

docker run --rm node:22 node --v8-options | grep -E "^ --" | wc -l

793
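To narrow that list down to the heap-sizing flags discussed in this post, you can filter the same output. The following is a minimal sketch. It assumes a local `node` binary on the PATH; with Docker, prefix the command with `docker run --rm node:22`. The helper name `v8_heap_flags` is ours and is not part of Node.js:

```shell
# Hypothetical helper: show only the heap-sizing V8 flags.
# Assumes `node` is on the PATH (with Docker: docker run --rm node:22 node --v8-options).
v8_heap_flags() {
  # -e lets the pattern start with "--"; -A1 also prints the line after each
  # match (in recent V8 versions, the flag's type and default value).
  node --v8-options \
    | grep -E -A1 -e '--max-semi-space-size|--max-old-space-size|--max-heap-size'
}

v8_heap_flags
```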

Even more interesting, if we look at the number of V8 options across Node.js versions, we see a steadily increasing trend.

What does that mean?

V8 is widely used in many diverse scenarios and it’s constantly being improved. More and more options are being added to tweak the runtime behavior. These knobs offer performance engineers and SREs a goldmine of optimization opportunities to extract more performance and efficiency out of Node.js applications. All without touching the JavaScript code!

In this post, we will focus on V8 memory management and some of the key configuration settings we can tune to optimize application performance and resource efficiency.

V8 memory management

Automatic memory management is a key benefit V8 provides to Node.js developers. But at the same time, it’s a crucial area that impacts your overall application performance and resource usage (hence cloud costs).

The V8 engine manages memory via garbage collection. V8 provides a highly optimized, generational, stop-the-world garbage collector (GC), which is considered one of the keys to V8 performance.

Stop-the-world means that while GC runs, JavaScript execution is paused (i.e., your users’ requests have to wait). This is why ensuring GC runs smoothly is paramount to maximizing application performance.

Generational refers to the hypothesis that most JavaScript objects die shortly after creation, while a small fraction lives much longer. V8 leverages this hypothesis and divides the heap memory into two main pools: the Young (or New) generation and the Old generation.

The young generation is where new objects are allocated. It is further divided into two equally sized semi-spaces (the From-space and the To-space). By default, the young generation is small (16 MB). A small young generation can cause excessive GC work, which in turn can impact application performance and CPU usage. The size of the semi-spaces is automatically selected by V8, but can be overridden via the --max-semi-space-size command line option (value in MB):

docker run node:22 node --max-semi-space-size=256 <your-node-app.js>
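One way to observe the effect of the semi-space size is V8's `--trace-gc` flag. It prints one line per garbage collection and labels young-generation collections `Scavenge`. The sketch below runs a hypothetical allocation-heavy workload of many short-lived objects under different `--max-semi-space-size` values and counts the scavenges. The workload and the `count_scavenges` helper are illustrative assumptions, and the exact trace format may vary between Node.js versions:

```shell
# Hypothetical workload: millions of short-lived objects, of which only the
# current batch of 1,000 is reachable at any time.
WORKLOAD='let batch = [];
for (let i = 0; i < 2e6; i++) {
  batch.push({ id: i, label: "item-" + i });
  if (batch.length === 1000) batch = [];
}'

# Count young-generation GCs ("Scavenge" lines in --trace-gc output, which goes to stdout).
count_scavenges() {
  node --trace-gc --max-semi-space-size="$1" -e "$WORKLOAD" | grep -c 'Scavenge'
}

for size in 1 16 64; do
  echo "--max-semi-space-size=$size -> $(count_scavenges "$size") scavenges"
done
```

With a larger semi-space, V8 can allocate more between scavenges, so the count should drop. The actual numbers depend on the Node.js version and the machine.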

The old generation stores objects that survived a number of GCs in the young generation. This space is usually bigger than the young generation (up to 2 GB). The size of the old generation is automatically selected by V8, but can be overridden via the --max-old-space-size command line option (value in MB):

docker run node:22 node --max-old-space-size=1024 <your-node-app.js>

The young and the old generation are part of the overall heap. The heap also contains other spaces, which we won’t describe in detail in this post, as they are typically less important from a performance perspective.

Again, V8 automatically selects the maximum size of the heap (more on this later), but it can be overridden via the --max-heap-size command line option:

docker run node:22 node --max-heap-size=2048 <your-node-app.js>
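To check which limit V8 actually applied, you can read it back through Node's `v8.getHeapStatistics()` API. The following is a minimal sketch, and the `heap_limit_mb` helper is hypothetical. Note that `heap_size_limit` covers the whole heap, not just the old generation. With `--max-old-space-size=1024`, it therefore typically reports a value somewhat above 1024 MB:

```shell
# Hypothetical helper: print V8's total heap size limit (in MB) for the given flags.
# V8 flags must come before -e on the node command line.
heap_limit_mb() {
  node "$@" -e '
    const v8 = require("node:v8");
    const { heap_size_limit } = v8.getHeapStatistics();
    console.log(Math.round(heap_size_limit / (1024 * 1024)));
  '
}

echo "default:                   $(heap_limit_mb) MB"
echo "--max-old-space-size=1024: $(heap_limit_mb --max-old-space-size=1024) MB"
echo "--max-heap-size=2048:      $(heap_limit_mb --max-heap-size=2048) MB"
```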

We are now armed with a basic understanding of Node.js and V8 memory management and some of the most important configuration options. Let’s now see the impact that tuning the V8 heap generations can have on your application performance and efficiency.

V8 heap tuning experimental setup and results

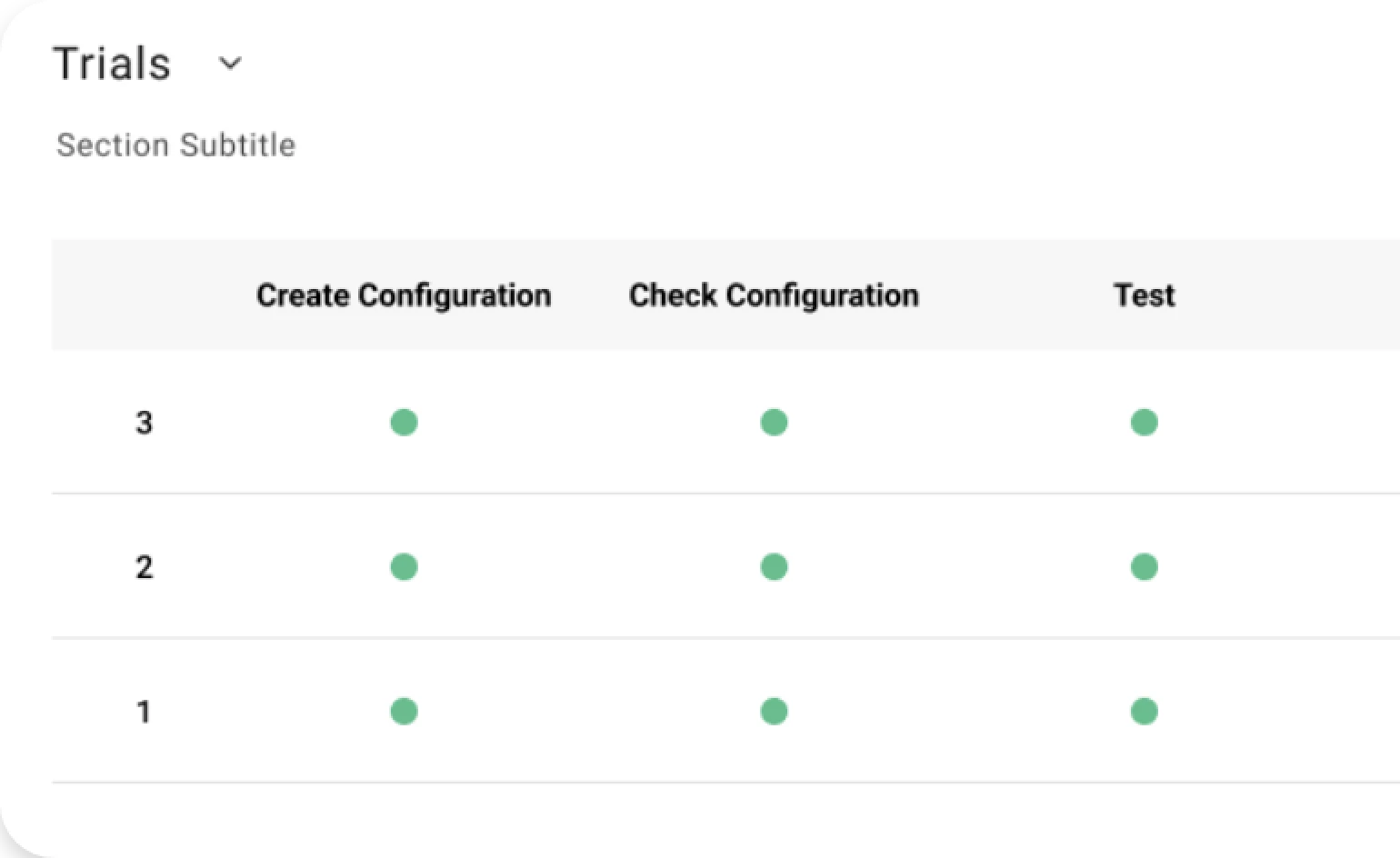

To explore the impact of tuning V8 heap generations, we set up an automated benchmarking process to launch a Node.js application under different configuration options and measure the corresponding performance metrics. Here’s our experimental setup:

- Application: We used web-tooling-benchmark, a standard benchmark designed to measure the performance of common JavaScript workloads.

- Configuration parameters: We tested different values of the V8 young and old generation sizes, namely --max-semi-space-size and --max-old-space-size.

- Performance metrics: We measured benchmark execution time and total CPU time used by the benchmark.

- Execution environment: We used Node.js v18. All experiments were run on EC2 instances.
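A process like the one above can be approximated with a small parameter sweep. The following is a simplified, illustrative sketch under several assumptions: Bash, a local `node` binary, and an entry point passed as the first argument (`app.js` is only a placeholder). It runs the script once per combination of `--max-semi-space-size` and `--max-old-space-size`. For each run, it records wall-clock time and CPU time (user + system) using Bash's `time` keyword:

```shell
#!/usr/bin/env bash
# Illustrative sweep; usage: ./sweep.sh path/to/app.js ("app.js" is a placeholder).
APP="${1:-app.js}"

# Run node once with the given V8 flags and print "wall user sys" in seconds.
measure() {
  local TIMEFORMAT='%R %U %S'   # Bash `time`: elapsed, user CPU, system CPU
  { time node "$@" "$APP" >/dev/null 2>&1; } 2>&1
}

sweep() {
  echo "semi_mb old_mb wall_s cpu_s"
  for semi in 16 64 256; do
    for old in 512 1024 2048; do
      read -r wall user sys <<< "$(measure --max-semi-space-size="$semi" --max-old-space-size="$old")"
      # CPU time = user + system; awk does the float addition Bash lacks.
      cpu=$(awk -v u="$user" -v s="$sys" 'BEGIN { printf "%.3f", u + s }')
      echo "$semi $old $wall $cpu"
    done
  done
}

sweep
```

In practice, each configuration should be repeated several times before comparing results, since a single run can be noisy.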

So what results did we get?

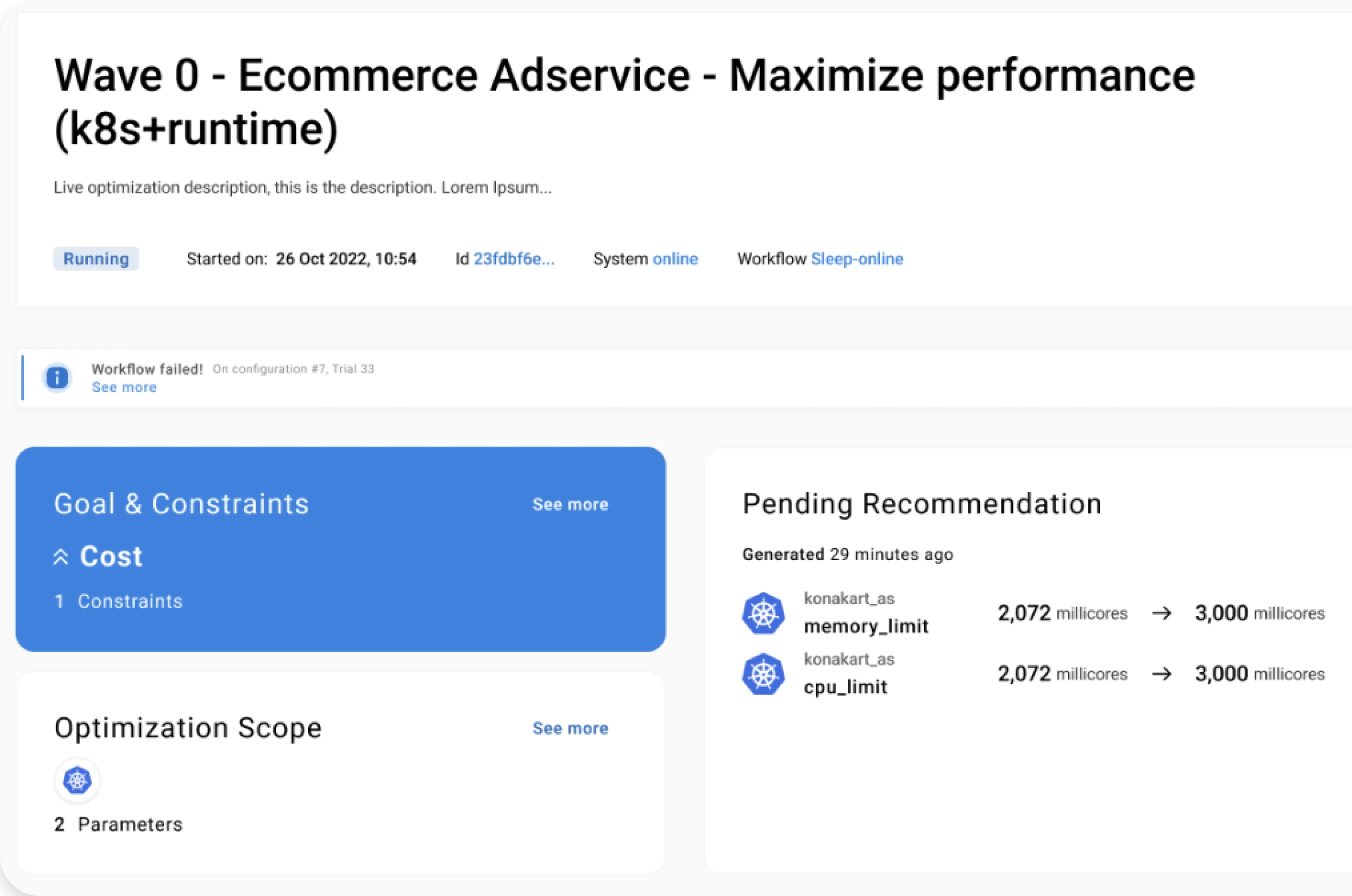

Let’s first focus on application performance. The charts show how execution time varies with the heap parameters. As you can see, the results are impressive: by tuning V8’s young and old heap generations, we got performance speedups of 11% and 45%, respectively.

Another common question when running Node.js applications in the cloud concerns resource usage (which relates to cloud costs). Is it possible to reduce the CPU or memory footprint of Node.js applications by tuning V8 configurations?

The answer is definitely yes! The charts show how CPU time varies with the two V8 parameters. We can see massive resource savings: by tuning V8’s young and old heap generations, the CPU used by the application was reduced by 22% and 68%, respectively.

Overall, this Node.js tuning exercise yields two main takeaways:

- Increasing the memory assigned to the V8 heap generations significantly improves both application performance and resource footprint.

- Performance and resource footprint gains plateau after some point, and increasing resources beyond that point doesn’t help much.

The first aspect can be explained by how the V8 garbage collector works: the bigger the heap, the more memory can be allocated before GC work is needed. This clearly reduces the CPU cycles used by the GC. Note, however, that as the heap gets bigger, GC pauses may get longer and start impacting your application’s tail latency (e.g., P90 and above).
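A rough, back-of-the-envelope illustration of this effect for the young generation follows. A scavenge is triggered approximately whenever the semi-space receiving new objects fills up, so the number of scavenges scales roughly with the bytes allocated divided by the semi-space size. This is a simplified model that ignores surviving objects and V8's dynamic resizing of the young generation. `estimate_scavenges` is a hypothetical helper:

```shell
# Simplified model (an assumption, not V8's actual heuristics):
# one scavenge each time the semi-space receiving new objects fills up.
estimate_scavenges() {
  local allocated_mb=$1 semi_mb=$2
  # Ceiling division with integer shell arithmetic.
  echo $(( (allocated_mb + semi_mb - 1) / semi_mb ))
}

# Example: a workload that allocates 4 GB (4096 MB) in total.
for semi in 1 16 64 256; do
  echo "semi-space ${semi} MB -> ~$(estimate_scavenges 4096 "$semi") scavenges"
done
```

The cost of each scavenge depends mainly on the objects that survive it rather than on the garbage. For the same live data, fewer scavenges therefore generally means fewer CPU cycles spent in GC.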

The second aspect is interesting as it shows that a counter-intuitive cost-performance trade-off exists in Node.js applications. If you run your apps with too small a heap, you have poor performance and high CPU usage and costs. If you run with the optimal heap size, you get high performance and minimal costs. If you increase heap memory beyond this point, performance won’t increase and you’ll use more resources and increase costs without benefits.

Lastly, it’s worth noting that the optimal configuration is highly application-dependent: it’s a function of the specific application code, the workload, and the execution environment – including K8s/hypervisor/OS/hardware versions. There is no silver bullet, other than tuning your own applications!

Conclusions

Improving performance and reducing resource usage of Node.js applications is typically approached by tuning the JavaScript code. However, the V8 JavaScript engine powering Node.js applications has hundreds of configuration settings that SREs and performance engineers can exploit to optimize application performance and reduce resource footprint and cloud costs.

In this post, we have shown that tuning V8 memory management and garbage collection can provide 2x or more application performance and resource efficiency gains, without touching the application code.

In upcoming blog posts, we will explore how V8 determines default values for heap memory and how this choice can impact Node.js application reliability on Kubernetes.

Stay tuned!

Reading Time: 11 minutes

Author: Mauro Pessina, Manager, Performance Engineering


© 2024 Akamas S.p.A. All rights reserved. – Via Schiaffino, 11 – 20158 Milan, Italy – P.IVA / VAT: 10584850969